From a Stalled Map to an Async AWS SDK: Why I Built aws-sdk-http-async

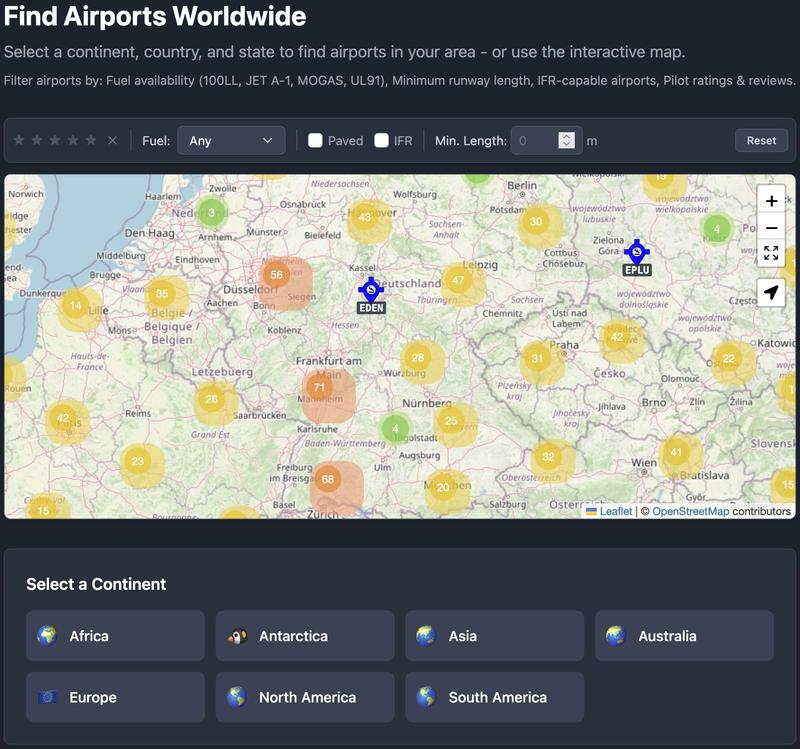

I maintain a side project called Airfield Directory, a Rails app backed by DynamoDB for general aviation pilots.

Because DynamoDB access is essentially all network I/O over HTTPS, it is a good playground to tinker with Ruby fibers before bringing the same approach into other production systems.

Airfield Directory runs on Falcon because I want fiber-based concurrency without the memory overhead of thread-per-request. It should feel fast and smooth under load.

Then the dynamic map happened.

The map view fans out a lot of DynamoDB queries across H3 hexagonal grid cells (a system invented by Uber). Under Falcon, I expected those requests to overlap in fibers. Instead, the reactor stalled and the app behaved like it was single-threaded again: latency spikes, janky scrolling, that “it’s fast until it isn’t” feel.

My first instinct was to do what I do elsewhere (e.g. ruby_llm): wrap the hot path in Async { ... } and trust the scheduler. It didn’t help. The AWS SDK was still blocking the reactor.

Trying to explain why the AWS SDK for Ruby fights Falcon

The AWS SDK’s default HTTP transport uses Net::HTTP wrapped in a connection pool. On paper, Net::HTTP itself is fiber-friendly in Ruby 3.0+: the fiber scheduler hooks into blocking I/O and yields to other fibers automatically.

But in practice, those implicit hooks don’t yield reliably, so the single reactor thread gets blocked often enough that all fibers serialize.

What I actually saw in production:

barrier = Async::Barrier.new(parent: parent_task)

batch_cells.each { |id| barrier.async { dynamodb.query(...) } }

barrier.wait

-> Observed: N× single request time (serialized)

threads = batch_cells.map { |id| Thread.new { dynamodb.query(...) } }

threads.each(&:join)

-> Observed: 1× single request time (concurrent)

So my current observation/explanation is:

- The SDK’s Net::HTTP transport is synchronous end‑to‑end and relies on implicit scheduler hooks in Net::HTTP/OpenSSL/DNS.

- In our workload, that path blocked the reactor often enough that the requests under Async::Barrier ran one after another.

- Threads still overlapped because each call ran in its own OS thread. Swapping the transport to async‑http fixed it because async‑http is fiber‑native end‑to‑end (pool + I/O), so the reactor can actually interleave requests.
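To check whether a batch of calls overlaps or serializes, a small timing harness can help. The sketch below uses only the standard library. `fake_query` is a hypothetical stand-in for `dynamodb.query` that simply sleeps, and the cell ids are made up. The serial run approximates what a batch degrades to when nothing yields, and the threaded run mirrors the Thread.new workaround above. It does not reproduce the fiber case itself, which needs a scheduler such as the one Falcon provides.

```ruby
require "benchmark"

# Hypothetical stand-in for dynamodb.query: blocks the calling thread for a
# fixed time, roughly the way a synchronous HTTP round trip would.
def fake_query(_cell_id, latency: 0.05)
  sleep(latency)
  :ok
end

# One call after another: the shape a batch takes when nothing yields.
def run_serial(cells)
  Benchmark.realtime { cells.each { |id| fake_query(id) } }
end

# One OS thread per call: the blocking waits overlap.
def run_threaded(cells)
  Benchmark.realtime do
    cells.map { |id| Thread.new { fake_query(id) } }.each(&:join)
  end
end

cells = %w[cell_a cell_b cell_c cell_d] # made-up ids for illustration

puts format("serial:   %.3fs", run_serial(cells))
puts format("threaded: %.3fs", run_threaded(cells))
```

If the threaded figure stays near one request's latency while the other approach grows with the batch size, the calls in that other approach are not overlapping.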

I can’t tell you in detail whether it’s gaps in hook coverage, OpenSSL handshakes, DNS resolution, or something else. In the end, async-http seems to me to be by far the best solution in the whole Async ecosystem. There may be other side effects I have overlooked, but my real-life observation was, and still is, reproducible.

I eventually fell back to threads just to keep the UI responsive. It worked, but it felt wrong.

I dug through the SDK internals and landed on the same conclusion captured in this issue (https://github.com/aws/aws-sdk-ruby/issues/2621) and in an abandoned experimental repo.

So, in a nutshell:

- Threads overlapped because each call ran in its own OS thread.

- Fibers with Async::Barrier serialized under Falcon.

- Swapping the transport to async-http fixed it, most likely because async-http is fiber-native end-to-end (pool + I/O), so the reactor can actually interleave requests.

The solution: aws-sdk-http-async

I built aws-sdk-http-async, a gem that plugs a new HTTP handler based on async-http into aws-sdk-core. It aims to preserve the SDK’s semantics (retries, error handling, telemetry, content-length validation) while making the transport fiber-friendly under Falcon.

Key goals:

- Async transport when a reactor exists (Falcon).

- Automatic fallback to Net::HTTP when no reactor exists (rake/console/tests).

- No patches required to make CLI tasks work.

- Safe defaults, explicit config, and clear failure modes for event streams.
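The reactor-or-fallback idea in the first two goals can be sketched roughly as follows. This is not the gem's actual implementation: `reactor_running?` and `pick_transport` are hypothetical names, and the returned symbols are placeholders for real handler objects. The only library call assumed is `Async::Task.current?` from the async gem, which returns the current task or nil.

```ruby
# Returns true only when the async gem is loaded and the caller is running
# inside an Async task, as it would be under Falcon.
def reactor_running?
  return false unless defined?(Async::Task)

  !Async::Task.current?.nil?
end

# Hypothetical selector: the symbols stand in for real transport handlers.
def pick_transport
  reactor_running? ? :async_http : :net_http
end

puts pick_transport
```

In a plain ruby process where the async gem is not loaded (a rake task or console, say), this picks :net_http. Inside an Async block it would pick :async_http.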

Usage (zero-config)

Add it to your Gemfile:

gem 'aws-sdk-http-async'

More information is available in the repo on GitHub.